Voluntarily sharing informative posts from unaffiliated sources.

- 40 Posts

- 0 Comments

Joined 8M ago

Cake day: Jan 16, 2024


For Android users seeking a privacy-focused browser, [Privacy Guides](https://www.privacyguides.org/en/mobile-browsers/#mull) recommends Mull:

>Mull is a privacy oriented and deblobbed Android browser based on Firefox. Compared to Firefox, it offers much greater fingerprinting protection out of the box, and disables JavaScript Just-in-Time (JIT) compilation for enhanced security. It also removes all proprietary elements from Firefox, such as replacing Google Play Services references.

>Mull enables many features upstreamed by the Tor uplift project using preferences from Arkenfox. Proprietary blobs are removed from Mozilla's code using the scripts developed for Fennec F-Droid.

- @ForgottenFlux@lemmy.world • English • tuta.com • 1M ago

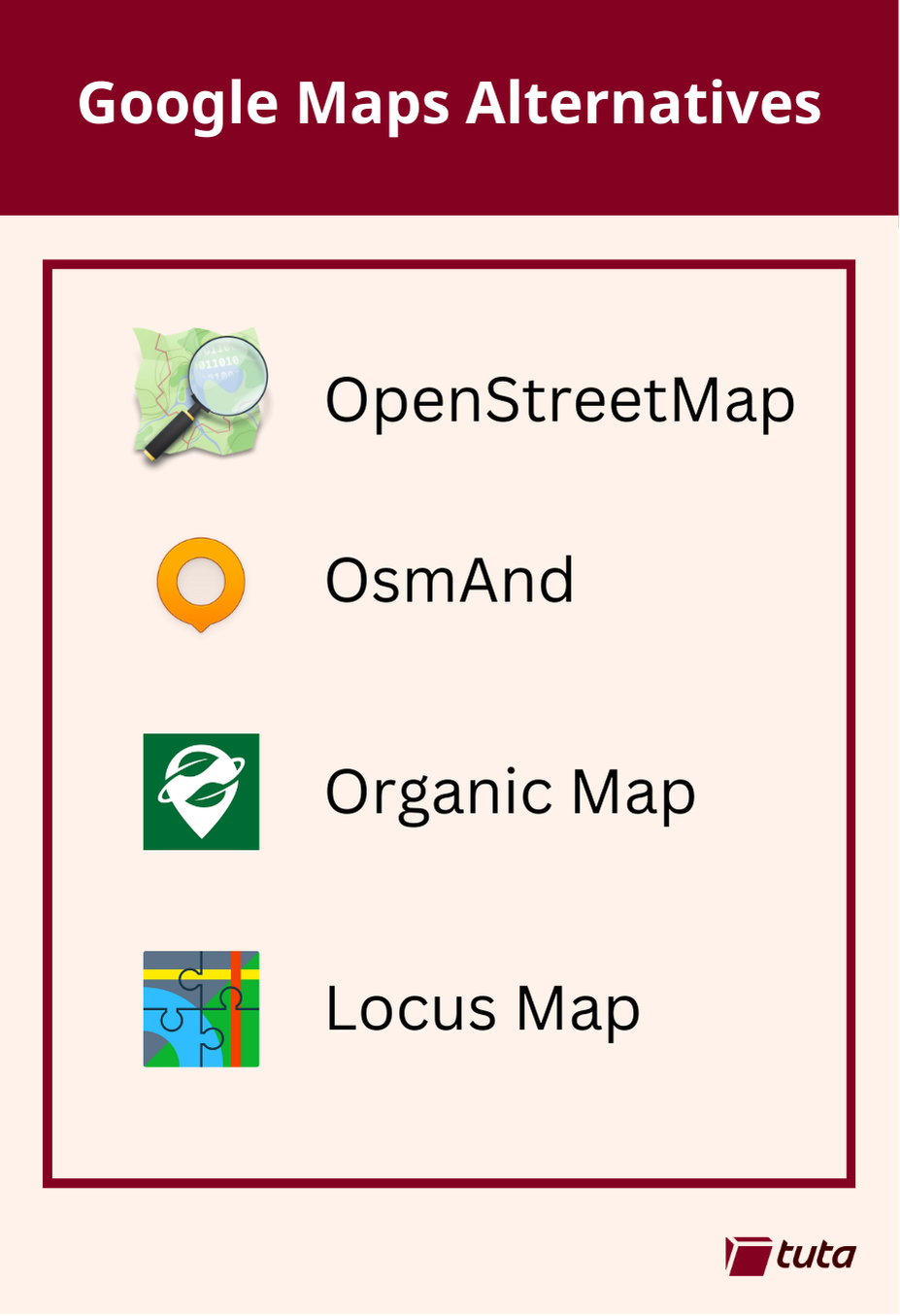

>repeated media reports of Google’s disregard for the privacy of the general public led to a push for open source, community driven alternatives to Google Maps. The biggest contender, now used by Google’s direct competitors and open source projects alike is OpenStreetMap.

1. OsmAnd

>OsmAnd is a fantastic choice when searching for an alternative to Google Maps. It is available on both Android and iOS devices with both free and paid subscription options. Free accounts have full access to maps and navigation features, but a paid subscription allows unlimited map downloads and more frequent updates.

>All subscriptions can take advantage of turn-by-turn navigation, route planning, map markers, and all the favorite features you expect from a map and navigation app in 2024. By making the jump to a paid subscription, you get some extra features like topo maps, nautical depths, and even point-of-interest data imported from Wikipedia.

2. Organic Maps

>Organic Maps is a great choice primarily because every feature of its iOS and Android apps works completely offline. This means if you have an old phone lying around, you can install the app, download the maps you need and presto! You now have an in-depth digital map in the palm of your hand without needing to worry about losing or damaging your primary mobile device when exploring the outdoors.

>Organic Maps tugs at our heartstrings with its commitment to privacy. The app can run entirely without a network connection and comes with no ads, no tracking, no data collection and, best of all, no registration.

3. Locus Maps

>Our third and last recommendation today is Locus Maps. Locus Maps is built by outdoor enthusiasts for the same community. Hiking, biking, and geocaching are all mainstays of the Locus app, alongside standard street map navigation.

>Locus is available in its complete version for Android, and an early version for iOS is still being worked on. Locus Maps offers navigation, tracking and routes, and also information on points of interest you might visit or stumble upon during your adventures.

Google ads push fake Google Authenticator site installing malware | The ad displays “google.com” and “https://www.google.com” as the click URL, and the advertiser’s identity is verified by Google

>Google has fallen victim to its own ad platform, allowing threat actors to create fake Google Authenticator ads that push the DeerStealer information-stealing malware.

>In a new malvertising campaign found by Malwarebytes, threat actors created ads that display an advertisement for Google Authenticator when users search for the software in Google search.

>What makes the ad more convincing is that it shows 'google.com' and "https://www.google.com" as the click URL, which clearly should not be allowed when a third party creates the advertisement.

>We have seen this very effective URL cloaking strategy in past malvertising campaigns, including for KeePass, Arc browser, YouTube, and Amazon. Still, Google continues to fail to detect when these imposter ads are created.

>Malwarebytes noted that the advertiser's identity is verified by Google, showing another weakness in the ad platform that threat actors abuse.

>When the download is executed, it will launch the DeerStealer information-stealing malware, which steals credentials, cookies, and other information stored in your web browser.

>Users looking to download software are recommended to avoid clicking on promoted results on Google Search, use an ad blocker, or bookmark the URLs of software projects they typically use.

>Before downloading a file, ensure that the URL you're on corresponds to the project's official domain. Also, always scan downloaded files with an up-to-date AV tool before executing.
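
The advice to check that a URL "corresponds to the project's official domain" can be made precise. Here is a minimal sketch, assuming you already know the official domain. The `is_official` helper is hypothetical, and `evil.example` / `attacker.example` are placeholder hosts. The helper compares the parsed hostname rather than the raw string, so look-alikes do not pass.

```python
from urllib.parse import urlsplit


def is_official(url: str, official_domain: str) -> bool:
    """Return True if url uses https and its host is official_domain or a subdomain of it.

    Matching uses the parsed hostname (which urlsplit lowercases), so strings
    such as 'https://google.com.attacker.example/' or
    'https://google.com@evil.example/' (user info before '@') are rejected.
    """
    parts = urlsplit(url)
    if parts.scheme != "https" or parts.hostname is None:
        return False
    host = parts.hostname.rstrip(".")  # tolerate a trailing dot in a fully qualified name
    domain = official_domain.lower()
    return host == domain or host.endswith("." + domain)
```

For example, `is_official("https://google.com@evil.example/", "google.com")` is `False`: everything before `@` is user info, and the real host is `evil.example`. A check like this cannot help if the official site itself is compromised. It complements the antivirus scan recommended above rather than replacing it.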

- @ForgottenFlux@lemmy.world • English • apnews.com • 1M ago

>Filed in 2022, the Texas lawsuit said that Meta was in violation of a state law that prohibits capturing or selling a resident’s biometric information, such as their face or fingerprint, without their consent.

>The company announced in 2021 that it was shutting down its face-recognition system and deleting the faceprints of more than 1 billion people amid growing concerns about the technology and its misuse by governments, police and others.

>Texas filed a similar lawsuit against Google in 2022. Paxton’s lawsuit says the search giant collected millions of biometric identifiers, including voiceprints and records of face geometry, through its products and services like Google Photos, Google Assistant, and Nest Hub Max. That lawsuit is still pending.

>The $1.4 billion settlement is unlikely to make a dent in Meta’s business. The Menlo Park, California-based tech company made a profit of $12.37 billion in the first three months of this year. Its revenue was $36.46 billion, an increase of 27% from a year earlier.

>The Kids Online Safety Act (KOSA) easily passed the Senate today despite critics' concerns that the bill may risk creating more harm than good for kids and perhaps censor speech for online users of all ages if it's signed into law.

>KOSA received broad bipartisan support in the Senate, passing with a 91–3 vote alongside the Children’s Online Privacy Protection Act (COPPA) 2.0. Both bills seek to control how much data can be collected from minors, as well as regulate the platform features that could harm children's mental health.

>However, while child safety advocates have heavily pressured lawmakers to pass KOSA, critics, including hundreds of kids, have continued to argue that it should be blocked.

>Among them is the American Civil Liberties Union (ACLU), which argues that "the House of Representatives must vote no on this dangerous legislation."

>If not, potential risks to kids include threats to privacy (by restricting access to encryption, for example), reduced access to vital resources, and reduced access to speech that impacts everyone online, the ACLU has alleged.

>The ACLU recently staged a protest of more than 300 students on Capitol Hill to oppose KOSA's passage. Attending the protest was 17-year-old Anjali Verma, who criticized lawmakers for ignoring kids who are genuinely concerned that the law would greatly limit their access to resources online.

>"We live on the Internet, and we are afraid that important information we’ve accessed all our lives will no longer be available," Verma said. "We need lawmakers to listen to young people when making decisions that affect us."

- @ForgottenFlux@lemmy.world • English • techcrunch.com • 1M ago

>In a new academic paper, researchers from the Belgian university KU Leuven detailed their findings when they analyzed 15 popular dating apps. Of those, Badoo, Bumble, Grindr, happn, Hinge and Hily all had the same vulnerability that could have helped a malicious user to identify the near-exact location of another user, according to the researchers.

>While none of those apps shares exact locations when displaying the distance between users on their profiles, they did use exact locations for the “filters” feature of the apps. Generally speaking, filters let users tailor their search for a partner based on criteria like age, height, what type of relationship they are looking for and, crucially, distance.

>To pinpoint the exact location of a target user, the researchers used a novel technique they call “oracle trilateration.”
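
The post only names the technique. As a hedged illustration of the general idea, here is a simplified model rather than the researchers' code. Suppose a distance filter answers "is this user within r km of me?" using exact coordinates, and suppose (an assumption here) that the attacker can set their own reported position. A binary search over r then recovers the distance from one vantage point, and three vantage points pin down the location. The sketch makes these simplifications:

- It uses a flat x/y plane in kilometres instead of latitude/longitude.
- `make_oracle` is a hypothetical stand-in for an app's filter.

```python
import math

# Toy planar model: positions are (x, y) in kilometres.
Point = tuple[float, float]


def make_oracle(target: Point):
    """Hypothetical filter using exact locations: it only answers
    'is the target within radius_km of this (possibly spoofed) position?'"""
    def within(observer: Point, radius_km: float) -> bool:
        return math.dist(observer, target) <= radius_km
    return within


def estimate_distance(within, observer: Point, hi: float = 100.0, tol: float = 1e-4) -> float:
    """Binary-search the smallest filter radius that still matches the target.

    Assumes the target is within `hi` km of the observer."""
    lo = 0.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if within(observer, mid):
            hi = mid  # target still matched: the true distance is at most mid
        else:
            lo = mid  # target filtered out: the true distance exceeds mid
    return hi


def trilaterate(p1: Point, r1: float, p2: Point, r2: float, p3: Point, r3: float) -> Point:
    """Find the point at distances r1, r2, r3 from p1, p2, p3.

    Subtracting circle 2 and circle 3 from circle 1 gives two linear
    equations a*x + b*y = c, solved here with Cramer's rule."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 - x1**2 + x2**2 - y1**2 + y2**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a1 * b2 - a2 * b1  # nonzero as long as the three points are not collinear
    return ((c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det)
```

Against the simulated filter, three binary searches from `(0, 0)`, `(10, 0)` and `(0, 10)` recover a hidden point almost exactly in this idealized model. As the post notes, the affected apps have since changed how their distance filters work.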

>The good news is that all the apps that had these issues, and that the researchers reached out to, have now changed how distance filters work and are not vulnerable to the oracle trilateration technique.

>Neither Badoo, which is owned by Bumble, nor Hinge responded to a request for comment.

- @ForgottenFlux@lemmy.world • English • techcrunch.com • 1M ago

>A federal district court in New York has ruled that U.S. border agents must obtain a warrant before searching the electronic devices of Americans and international travelers crossing the U.S. border.

>The ruling on July 24 is the latest court opinion to upend the U.S. government’s long-standing legal argument, which asserts that federal border agents should be allowed to access the devices of travelers at ports of entry, like airports, seaports and land borders, without a court-approved warrant.

>“The ruling makes clear that border agents need a warrant before they can access what the Supreme Court has called ‘a window into a person’s life,’” Scott Wilkens, senior counsel at the Knight First Amendment Institute, one of the groups that filed in the case, said in a press release Friday.

>The district court’s ruling takes effect across the U.S. Eastern District of New York, which includes New York City-area airports like John F. Kennedy International Airport, one of the largest transportation hubs in the United States.

>Critics have for years argued that these searches are unconstitutional and violate the Fourth Amendment, which protects against unreasonable searches and seizures, including of a person’s electronic devices.

>In this court ruling, the judge relied in part on an amicus brief filed on the defendant’s behalf that argued the unwarranted border searches also violate the First Amendment on grounds of presenting an “unduly high” risk of a chilling effect on press activities and journalists crossing the border.

>With several federal courts ruling on border searches in recent years, the issue of their legality is likely to end up before the Supreme Court, unless lawmakers act sooner.

>After all, the privacy of our mind may be the only privacy we have left.

- @ForgottenFlux@lemmy.world • English • www.eff.org • 2M ago

>The EU Council has now passed a 4th term without passing its controversial message-scanning proposal. The just-concluded Belgian Presidency failed to broker a deal that would push forward this regulation, which has now been debated in the EU for more than two years.


>For all those who have reached out to sign the “Don’t Scan Me” petition, thank you—your voice is being heard. News reports indicate the sponsors of this flawed proposal [withdrew it because they couldn’t get a majority](https://netzpolitik.org/2024/victory-for-now-no-majority-on-chat-control-for-belgium/) of member states to support it.


>Now, it’s time to stop attempting to compromise encryption in the name of public safety. EFF has [opposed](https://www.eff.org/deeplinks/2022/06/eus-new-message-scanning-regulation-must-be-stopped) this legislation from the start. Today, [we’ve published a statement](https://edri.org/wp-content/uploads/2024/07/Statement_-The-future-of-the-CSA-Regulation.pdf), along with EU civil society groups, explaining why this flawed proposal should be withdrawn.


>The scanning proposal would create “detection orders” that allow for messages, files, and photos from hundreds of millions of users around the world to be compared to government databases of child abuse images. At some points during the debate, EU officials even suggested using AI to scan text conversations and predict who would engage in child abuse. That’s one of the reasons why some opponents have labeled the proposal “chat control.”


>There’s [scant public support](https://edri.org/our-work/press-release-poll-youth-in-13-eu-countries-refuse-surveillance-of-online-communication/) for government file-scanning systems that break encryption. Nor is there [support in EU law](https://www.eff.org/deeplinks/2024/03/european-court-human-rights-confirms-undermining-encryption-violates-fundamental). People who need secure communications the most—lawyers, journalists, human rights workers, political dissidents, and oppressed minorities—will be the most affected by such invasive systems. Another group harmed would be those whom the EU’s proposal claims to be helping—abused and at-risk children, who need to securely communicate with trusted adults in order to seek help.

>The right to have a private conversation, online or offline, is a bedrock human rights principle. When surveillance is used as an investigation technique, it must be targeted and coupled with strong judicial oversight. In the coming EU Council presidency, which will be led by Hungary, leaders should drop this flawed message-scanning proposal and focus on law enforcement strategies that respect people’s privacy and security.

>Further reading:

>- [EFF and EDRi Coalition Statement on the Future of the CSA Regulation](https://edri.org/wp-content/uploads/2024/07/Statement_-The-future-of-the-CSA-Regulation.pdf)

- @ForgottenFlux@lemmy.world • English • www.eff.org • 2M ago

>We’ve said it before: online age verification is incompatible with privacy. Companies responsible for storing or processing sensitive documents like drivers’ licenses are likely to encounter data breaches, potentially exposing not only personal data like users’ government-issued ID, but also information about the sites that they visit.


>This threat is not hypothetical. This morning, 404 Media reported that a major identity verification company, AU10TIX, left login credentials exposed online for more than a year, allowing access to this very sensitive user data.


>A researcher gained access to the company’s logging platform, “which in turn contained links to data related to specific people who had uploaded their identity documents,” including “the person’s name, date of birth, nationality, identification number, and the type of document uploaded such as a drivers’ license,” as well as images of those identity documents. Platforms reportedly using AU10TIX for identity verification include TikTok and X, formerly Twitter.

>Lawmakers pushing forward with dangerous age verification laws should stop and consider this report. Proposals like the federal Kids Online Safety Act and California’s Assembly Bill 3080 are moving further toward passage, with lawmakers in the House scheduled to vote in a key committee on KOSA this week, and California's Senate Judiciary committee set to discuss AB 3080 next week. Several other laws requiring age verification for accessing “adult” content and social media content have already passed in states across the country. EFF and others are challenging some of these laws in court.

>In the final analysis, age verification systems are surveillance systems. Mandating them forces websites to require visitors to submit information such as government-issued identification to companies like AU10TIX. Hacks and data breaches of this sensitive information are not a hypothetical concern; it is simply a matter of when the data will be exposed, as this breach shows.


>Data breaches can lead to any number of dangers for users: phishing, blackmail, or identity theft, in addition to the loss of anonymity and privacy. Requiring users to upload government documents—some of the most sensitive user data—will hurt all users.


>According to the news report, so far the exposure of user data in the AU10TIX case did not lead to exposure beyond what the researcher showed was possible. If age verification requirements are passed into law, users will likely find themselves forced to share their private information across networks of third-party companies if they want to continue accessing and sharing online content. Within a year, it wouldn’t be strange to have uploaded your ID to a half-dozen different platforms.


>No matter how vigilant you are, you cannot control what other companies do with your data. If age verification requirements become law, you’ll have to be lucky every time you are forced to share your private information. Hackers will just have to be lucky once.

- @ForgottenFlux@lemmy.world • English • techcrunch.com • 3M ago

>iOS apps that build their own social networks on the back of users’ address books may soon become a thing of the past. In iOS 18, Apple is cracking down on the social apps that ask users’ permission to access their contacts — something social apps often do to connect users with their friends or make suggestions for who to follow. Now, Apple is adding a new two-step permissions pop-up screen that will first ask users to allow or deny access to their contacts, as before, and then, if the user allows access, will allow them to choose which contacts they want to share, if not all.

>For those interested in security and privacy, the addition is welcome. As security firm Mysk wrote on X, the change would be “sad news for data harvesting apps…” Others pointed out that this would hopefully prevent apps that ask repeatedly for address book access even after they had been denied. Now users could grant them access but limit which contacts they could actually ingest.

>“If you’re someone who’s buying products on the web, we know who is buying the products where, and we can leverage the data,” Grether said in a statement to the WSJ. He also said that PayPal will receive shopping data from customers using its credit card in stores.

>A PayPal spokesperson tells the WSJ that the company will collect data from customers by default while also offering the ability to opt out.

>PayPal is far from the only company to sell ads based on transaction information. In January, a study from Consumer Reports revealed that Facebook gets information about users from thousands of different companies, including retailers like Walmart and Amazon. JPMorgan Chase also announced that it’s creating an ad network based on customer spending data, while Visa is making similar moves. Of course, this doesn’t include the tracking shopping apps do to log your offline purchases, too.

- @ForgottenFlux@lemmy.world • English • uk.pcmag.com • 4M ago

>Google’s AI model will potentially listen in on all your phone calls — or at least ones it suspects are coming from a fraudster.


>To protect the user’s privacy, the company says Gemini Nano operates locally, without connecting to the internet. “This protection all happens on-device, so your conversation stays private to you. We’ll share more about this opt-in feature later this year,” the company says.

>“This is incredibly dangerous,” says Meredith Whittaker, president of the Signal Foundation, which supports the end-to-end encrypted messaging app Signal.

>Whittaker, a former Google employee, argues that the entire premise of the anti-scam call feature poses a potential threat. That’s because Google could program the same technology to scan for other keywords, such as requests for access to abortion services.

>“It lays the path for centralized, device-level client-side scanning,” she said in a post on Twitter/X. “From detecting 'scams' it's a short step to ‘detecting patterns commonly associated w/ seeking reproductive care’ or ‘commonly associated w/ providing LGBTQ resources' or ‘commonly associated with tech worker whistleblowing.’”

>With the latest version of Firefox for U.S. desktop users, we’re introducing a new way to measure search activity broken down into high level categories. This measure is not linked with specific individuals and is further anonymized using a technology called OHTTP to ensure it can’t be connected with user IP addresses.


>Let’s say you’re using Firefox to plan a trip to Spain and search for “Barcelona hotels.” Firefox infers that the search results fall under the category of “travel,” and it increments a counter to calculate the total number of searches happening at the country level.


>Here’s the current list of categories we’re using: animals, arts, autos, business, career, education, fashion, finance, food, government, health, hobbies, home, inconclusive, news, real estate, society, sports, tech and travel.
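
The post describes the mechanism only loosely: infer a category from the search results, then increment an aggregate counter. Below is a minimal sketch of that idea. Several parts are assumptions for illustration only:

- the domain-to-category table and the `.example` domains;
- the `categorize` and `record_search` helpers;
- the majority-vote rule, under which ties and unrecognized results fall back to "inconclusive".

Sending the counts over OHTTP is not modelled here.

```python
from collections import Counter

# Hypothetical domain-to-category table; the real classifier is not described in the post.
DOMAIN_CATEGORIES = {
    "booking.example": "travel",
    "hotels.example": "travel",
    "recipes.example": "food",
}


def categorize(result_domains: list[str]) -> str:
    """Return the most common category among recognized result domains.

    Falls back to 'inconclusive' when nothing is recognized or the top two categories tie."""
    cats = Counter(DOMAIN_CATEGORIES[d] for d in result_domains if d in DOMAIN_CATEGORIES)
    ranked = cats.most_common(2)
    if not ranked or (len(ranked) == 2 and ranked[0][1] == ranked[1][1]):
        return "inconclusive"
    return ranked[0][0]


# Only aggregate per-category counts are kept: no query text and no user identifier.
category_counts = Counter()


def record_search(result_domains: list[str]) -> None:
    category_counts[categorize(result_domains)] += 1
```

In this model, a search whose results come mostly from travel sites only bumps the `travel` counter. Nothing about the query itself is stored.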


>Having an understanding of what types of searches happen most frequently will give us a better understanding of what’s important to our users, without giving us additional insight into individual browsing preferences. This helps us take a step forward in providing a browsing experience that is more tailored to your needs, without us stepping away from the principles that make us who we are.


>We understand that any new data collection might spark some questions. Simply put, this new method only categorizes the websites that show up in your searches — not the specifics of what you’re personally looking up.


>Sensitive topics, like searching for particular health care services, are categorized only under broad terms like health or society. Your search activities are handled with the same level of confidentiality as all other data regardless of any local laws surrounding certain health services.


>Remember, you can always opt out of sending any technical or usage data to Firefox. Here’s a step-by-step guide on how to adjust your settings. We also don’t collect category data when you use Private Browsing mode on Firefox.

>The Copy Without Site Tracking option can now remove parameters from nested URLs. It also includes expanded support for blocking over 300 tracking parameters from copied links, including those from major shopping websites. Keep those trackers away when sharing links!
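
Here is a rough sketch of what removing parameters "from nested URLs" means. It assumes a redirect-style link whose query string carries another, percent-encoded URL. `TRACKING_PARAMS` is a small illustrative subset, not Firefox's actual list of 300+ parameters. `strip_tracking` is a hypothetical helper, not Firefox code.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Illustrative subset only; not the list Firefox ships.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                   "utm_content", "fbclid", "gclid"}


def strip_tracking(url: str, depth: int = 0) -> str:
    """Drop known tracking parameters, recursing into parameter values that are
    themselves URLs (e.g. ?url=https://...). Depth is capped to avoid runaway nesting."""
    parts = urlsplit(url)
    kept = []
    for key, value in parse_qsl(parts.query, keep_blank_values=True):  # values come back decoded
        if key.lower() in TRACKING_PARAMS:
            continue
        if depth < 3 and value.startswith(("http://", "https://")):
            value = strip_tracking(value, depth + 1)  # clean the nested URL as well
        kept.append((key, value))
    # urlencode re-escapes the nested URL; an empty query drops the '?'
    return urlunsplit(parts._replace(query=urlencode(kept)))


# strip_tracking("https://redirect.example/out?url=https%3A%2F%2Fshop.example%2Fitem%3Fid%3D7%26utm_source%3Dnewsletter&fbclid=abc")
# -> "https://redirect.example/out?url=https%3A%2F%2Fshop.example%2Fitem%3Fid%3D7"
```

Re-encoding with `urlencode` can change how untouched parameters are escaped (for example, spaces become `+`). A production implementation would want to preserve the original encoding.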

- @ForgottenFlux@lemmy.world • English • grapheneos.org • 4M ago

- Mullvad VPN's blog [post](https://mullvad.net/en/blog/dns-traffic-can-leak-outside-the-vpn-tunnel-on-android): DNS traffic can leak outside the VPN tunnel on Android

>Identified scenarios where the Android OS can leak DNS traffic:

>- If a VPN is active without any DNS server configured.

>- For a short period of time while a VPN app is re-configuring the tunnel or is being force stopped/crashes.


>The leaks seem to be limited to direct calls to the C function getaddrinfo.

>The above applies regardless of whether Always-on VPN and Block connections without VPN are enabled, which is not expected OS behavior and should therefore be fixed upstream in the OS.

>We’ve been able to confirm that these leaks occur in multiple versions of Android, including the latest version (Android 14).


>We have reported [the issues and suggested improvements](https://issuetracker.google.com/issues/337961996) to Google and hope that they will address this quickly.

- GrapheneOS 2024050900 release changelog [announcement](https://grapheneos.org/releases#2024050900):

>prevent app-based VPN implementations from leaking DNS requests when the VPN is down/connecting (this is a preliminary defense against this issue and more research is required, along with apps preventing the leaks on their end or they'll still have leaks outside of GrapheneOS)

- @ForgottenFlux@lemmy.world • English • bitwarden.com • 4M ago

>Bitwarden Authenticator is a standalone app that is available for everyone, even non-Bitwarden customers.

>In its current release, Bitwarden Authenticator generates time-based one-time passwords (TOTP) for users who want to add an extra layer of 2FA security to their logins.
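
Bitwarden's own code is not shown in the post. To illustrate what a time-based one-time password is in practice, here is a minimal sketch of RFC 6238 with its common defaults: HMAC-SHA1, a 30-second time step, and 6 digits. `totp` and `secret_from_base32` are illustrative helpers, not Bitwarden APIs.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def secret_from_base32(b32: str) -> bytes:
    """Decode the Base32 secret typically shown alongside a 2FA QR code (adds missing padding)."""
    cleaned = b32.replace(" ", "").upper()
    return base64.b32decode(cleaned + "=" * (-len(cleaned) % 8))


def totp(secret: bytes, for_time: Optional[int] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP using HMAC-SHA1."""
    if for_time is None:
        for_time = int(time.time())
    counter = for_time // step  # number of elapsed time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation from RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)
```

Take the RFC 6238 test secret `12345678901234567890` (ASCII) at Unix time 59. There, `totp(key, 59, digits=8)` returns `94287082`, which matches the RFC's SHA-1 test vector. The app and the service compute the same code independently from the shared secret and the current time. That is why generating a code needs no network connection.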

>There is a comprehensive roadmap planned with additional functionality.

>Available for [iOS and Android](https://bitwarden.com/download/#bitwarden-authenticator-mobile)

>The EU's Data Protection Board (EDPB) has told large online platforms they should not offer users a binary choice between paying for a service and consenting to their personal data being used to provide targeted advertising.

>In October last year, Meta said users in the EU, EEA, or Switzerland could pay to remove personalized ads from their Instagram or Facebook feeds and prevent the company from using their personal data for marketing. Meta then announced a subscription model of €9.99/month on the web or €12.99/month on iOS and Android for users who did not want their personal data used for targeted advertising.

>At the time, Felix Mikolasch, data protection lawyer at noyb, said: "EU law requires that consent is the genuine free will of the user. Contrary to this law, Meta charges a 'privacy fee' of up to €250 per year if anyone dares to exercise their fundamental right to data protection."

Related: [Privacy Guides recommendation of Ente Auth](https://www.privacyguides.org/en/multi-factor-authentication/#ente-auth)

More Information: [GrapheneOS Mastodon account](https://grapheneos.social/@GrapheneOS/112204428984003954)

- @ForgottenFlux@lemmy.world • English • www.forbes.com • 6M ago

If the linked article has a paywall, you can access this archived version instead: https://archive.ph/zyhax

>The court orders show the government telling Google to provide the names, addresses, telephone numbers and user activity for all Google account users who accessed the YouTube videos between January 1 and January 8, 2023. The government also wanted the IP addresses of non-Google account owners who viewed the videos.

>“This is the latest chapter in a disturbing trend where we see government agencies increasingly transforming search warrants into digital dragnets. It’s unconstitutional, it’s terrifying and it’s happening every day,” said Albert Fox Cahn, executive director at the Surveillance Technology Oversight Project. “No one should fear a knock at the door from police simply because of what the YouTube algorithm serves up. I’m horrified that the courts are allowing this.” He said the orders were “just as chilling” as geofence warrants, in which Google has been ordered to provide data on all users in the vicinity of a crime.

>The decision followed a New York Times report this month that G.M. had, for years, been sharing data about drivers’ mileage, braking, acceleration and speed with the insurance industry. The drivers were enrolled — some unknowingly, they said — in OnStar Smart Driver, a feature in G.M.’s internet-connected cars that collected data about how the car had been driven and promised feedback and digital badges for good driving.

If the article link contains a paywall, you can consider reading this alternative article instead: '[GM Stops Sharing Driver Data With Brokers Amid Backlash](https://arstechnica.com/cars/2024/03/gm-stops-sharing-driver-data-with-brokers-amid-backlash/)' on Ars Technica.

>The nonprofit organization that supports the Firefox web browser said today it is winding down its new partnership with Onerep, an identity protection service recently bundled with Firefox that offers to remove users from hundreds of people-search sites. The move comes just days after a report by KrebsOnSecurity forced Onerep’s CEO to admit that he has founded dozens of people-search networks over the years.

>Mozilla only began bundling Onerep in Firefox last month, when it announced the reputation service would be offered on a subscription basis as part of Mozilla Monitor Plus.

- @ForgottenFlux@lemmy.world • English • www.eff.org • 6M ago

>Cars collect a lot of our personal data, and car companies disclose a lot of that data to third parties. It’s often unclear what’s being collected, and what's being shared and with whom. A recent New York Times article highlighted how data is shared by G.M. with insurance companies, sometimes without clear knowledge from the driver. If you're curious about what your car knows about you, you might be able to find out. In some cases, you may even be able to opt out of some of that sharing of data.

>It puts a lot of features at the fingertips of the faithful, including the ability to filter whole neighborhoods by religion, ethnicity, “Hispanic country of origin,” “assimilation,” and whether there are children living in the household.

>Its core function is to produce neighborhood maps and detailed tables of data about people from non-Anglo-European backgrounds, drawn from commercial sources typically used by marketing and data-harvesting firms.

>training videos produced by users show the extent to which evangelical groups are using sophisticated ways to target non-Christian communities, with questionable safeguards around security and privacy.

>In one instance, he points to the sharable note-taking function and suggests leaving information for each household, such as “Daughter left for college” and “Mother is in the hospital.”

>increasingly popular among Christian supremacist groups, prayerwalking calls on believers to wage “violent prayer” (persistently and aggressively channeling emotions of hatred and anger against Satan), engage in “spiritual mapping” (identifying areas where evil is at work, such as the darkness ruling over an abortion clinic, or the “spirit of greed” ruling over Las Vegas), and conduct prayerwalking (roaming the streets in groups, “praying on-site with insight”).

>newly arrived refugees might well find a knock on the door from strangers with knowledge of their personal circumstances distressing—and that’s before these surprise visitors even begin to attempt to convert them.

>placing people of different ethnic and religious backgrounds on easy-to-access databases is a dangerous road to go down

- @ForgottenFlux@lemmy.world • English • www.wired.com • 6M ago

>But this policy may struggle to address the camera problem at large, as the company already required hosts to disclose indoor cameras, and guests have sometimes reported hidden and undisclosed cameras.

>The new rules also require hosts to disclose to guests whether they are using noise decibel monitors or outdoor cameras before guests book.

>“This just emphasizes the fact that surveillance always gives a huge amount of power to whoever controls the camera system,” says Fox Cahn. “When it's used in a property you're renting, whether it's a landlord or an Airbnb, it's ripe for abuse.”

- @ForgottenFlux@lemmy.world • English • 7M ago

cross-posted from: https://lemmy.world/post/12202255

> Announcement from the Proton team on [Reddit](https://www.reddit.com/r/ProtonDrive/comments/1avicc5/the_free_proton_drive_plan_is_getting_5x_the/) ([Libreddit link](https://farside.link/libreddit/r/ProtonDrive/comments/1avicc5/the_free_proton_drive_plan_is_getting_5x_the/)):
>
> > Today, we’re increasing file storage limits on the free plan.
> >
> > Instead of sharing 1 GB between files and email, you’ll now have:
> >
> > - 5 GB for Proton Drive
> > - 1 GB for Proton Mail
>
> Additional context: For Proton Drive, you now start with 2 GB, and for Proton Mail, you start with 500 MB. After signing up for the Free plan, you can unlock the maximum storage allowance on each service as follows:
>
> - You can boost your Proton Mail storage from 500 MB to 1 GB by completing four [account setup actions](https://proton.me/support/get-started-mail).
> - You can boost your Proton Drive storage from the default 2 GB to 5 GB by completing three [tasks](https://proton.me/support/more-storage-proton-drive).

- @ForgottenFlux@lemmy.world • English • themarkup.org • 7M ago

Here’s a condensed version of all 20 tips in one place. Click on any individual tip to learn more.

*Note: Not all tips apply to everyone. Assess your [threat model](https://ssd.eff.org/module/your-security-plan) before implementing.*

- [Use a privacy protector](https://mrkup.org/gj-toc-1) on your phone and computer screens to protect your activity from wandering eyes.

- [Download a privacy-protecting web browser](https://mrkup.org/gj-toc-2) that blocks not only ads, but cookies, trackers, and more.

- [Install software updates](https://mrkup.org/gj-toc-3) as soon as they’re available to stay secure and avoid being hacked.

- [Activate two-factor authentication](https://mrkup.org/gj-toc-4) across all of your accounts, ideally using authenticator apps or security keys.

- [Don’t share your current location](https://mrkup.org/gj-toc-5) on social media—at least, until after you’ve left it.

- [Use a password manager](https://mrkup.org/gj-toc-6) to ensure you have a secure, unique password for each of your accounts.

- [Upgrade your wireless router](https://mrkup.org/gj-toc-7) hardware, especially if yours is from before 2020. Your connection will be more secure thanks to new privacy standards.

- [Get a burner phone number](https://mrkup.org/gj-toc-8) in case you need an extra level of privacy when working, signing up for shopper rewards programs, or even using dating apps.

- [Review your social media privacy settings](https://mrkup.org/gj-toc-9) to stop your account from being shown to people you may not want seeing it.

- [Ditch Google Maps](https://mrkup.org/gj-toc-10) for an alternative. Even switching to Apple Maps can reduce how much of your data is sent to advertisers.

- [Browse the web in “private” or “incognito” mode](https://mrkup.org/gj-toc-11) to reduce the amount of cookies you’re tracked by and keep your accounts secure. Especially if you’re using a public computer.

- [Activate a little-known Screen Time setting](https://mrkup.org/gj-toc-12), if you’re an iPhone user, to decrease the chance of your data being taken if your phone gets lost or stolen.

- [Keep your kids' info off the internet](https://mrkup.org/gj-toc-13) if you’re a parent. That’s it. That’s the tip.

- [Keep your info off the internet](https://mrkup.org/gj-toc-14) by using services like DeleteMe, that remove your data from data brokers’ hands.

- [Don’t forget about real-world privacy](https://mrkup.org/gj-toc-15), like using cash and shredding your mail before you throw it away.

- [Try using a “virtual machine”](https://mrkup.org/gj-toc-16) the next time you want to open a potentially sketchy document or software.

- [Implement a written or numeric passcode](https://mrkup.org/gj-toc-17), rather than using FaceID or other face recognition technology, to unlock your phone.

- [Lie about your birthday!](https://mrkup.org/gj-toc-18) To retailers in particular. They don’t need to know.

- [Fake your answers to account security questions](https://mrkup.org/gj-toc-19) to keep hackers from finding and using your real info. This can also stop some pretty personal data from getting exposed in a potential breach.

- [Say goodbye to Gmail, Hotmail, and the like](https://mrkup.org/gj-toc-20) by switching to a more private email provider.

Actions like these—however small they may feel—do make a difference. By implementing just a few of these privacy tips, your accounts could be safer and less of your data could end up with advertisers.

If thinking about protecting your privacy online makes you feel anxious, overwhelmed, or resigned, you aren’t alone.

Nearly 70 percent of Americans felt overwhelmed solely by the number of passwords they have to track, according to a 2023 [Pew Research Center survey](https://www.pewresearch.org/internet/2023/10/18/how-americans-view-data-privacy/). Just over 60 percent aren’t sure that any steps they take when managing their privacy online make a difference, the same survey found.

That’s why, this January, The Markup published one practical privacy tip a day that Markup staffers or readers actually use in their own lives.

We called it “[Gentle January](https://themarkup.org/series/gentle-january)” because the tips are a mix of calming (did you know you can stop tracking all those passwords yourself?), whimsical (yes yes, we do teach you to fake some things), or downright practical (turns out, you should install those software updates).

*The above excerpts are taken from [this](https://themarkup.org/gentle-january/2024/01/31/overwhelmed-by-digital-privacy-reset-with-these-practical-tips) article by The Markup.*
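
The password-manager and security-question tips both come down to long, random strings that are never reused. The following is a minimal sketch, not how any particular password manager works, using Python's standard `secrets` module. The 94-character pool, the default length of 20, and the helper names `random_password`, `fake_security_answer` and `entropy_bits` are illustrative assumptions.

```python
import math
import secrets
import string

# Assumed character pool: 52 letters, 10 digits, 32 punctuation marks (94 total).
# Some sites reject certain symbols, so a real generator may need a narrower pool.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def random_password(length: int = 20) -> str:
    """Return a password of `length` characters chosen with a CSPRNG."""
    if length < 1:
        raise ValueError("length must be at least 1")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def fake_security_answer(nbytes: int = 16) -> str:
    """Return a random, meaningless answer for a security question.

    The idea is to store it in a password manager; it has no link to real,
    discoverable personal information.
    """
    return secrets.token_urlsafe(nbytes)


def entropy_bits(length: int, pool_size: int = len(ALPHABET)) -> float:
    """Entropy of a uniformly random password: length * log2(pool size)."""
    return length * math.log2(pool_size)


if __name__ == "__main__":
    print(random_password())
    print(fake_security_answer())
    print(f"{entropy_bits(20):.1f} bits")  # roughly 131 bits for 20 characters
```

Using `secrets` rather than `random` is the key design choice: `random` is not designed for security, while `secrets` draws from the operating system's cryptographically secure source.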

*Please note that not all of the software suggestions in the article are necessarily the best options from a privacy standpoint. For example, the article mentions Google Authenticator for two-factor authentication (2FA), whereas open-source alternatives such as Aegis or Ente Auth may be preferable. If you're looking for privacy tools, you may find the following resources helpful:*

[Privacy Guides](https://www.privacyguides.org/en/tools/)

[Avoid the Hack](https://avoidthehack.com/tools)
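
The authenticator apps named in the 2FA note (Google Authenticator, Aegis and Ente Auth) all generate codes with the same open standard, time-based one-time passwords (TOTP, RFC 6238), so the codes themselves are identical and the choice comes down to each app's privacy and backup features. Below is a minimal standard-library sketch of that algorithm for understanding only, not a replacement for a vetted app. It assumes the common defaults (HMAC-SHA1, 30-second steps, 6 digits), and the function names `hotp` and `totp` are illustrative.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # The counter is encoded as an 8-byte big-endian integer.
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep the last `digits` decimal digits, left-padded with zeros.
    return str(code % 10 ** digits).zfill(digits)


def totp(secret_b32: str, now: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over floor(time / step)."""
    # Setup keys are usually shown as Base32 without '=' padding; restore it.
    cleaned = secret_b32.replace(" ", "").upper()
    key = base64.b32decode(cleaned + "=" * (-len(cleaned) % 8))
    t = time.time() if now is None else now
    return hotp(key, int(t // step), digits)


if __name__ == "__main__":
    # RFC 6238 test secret: ASCII "12345678901234567890" encoded as Base32.
    demo_secret = "GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ"
    print(totp(demo_secret, now=59, digits=8))  # 94287082 (RFC 6238 test vector)
    print(totp(demo_secret))  # current 6-digit code
```

Because a code depends only on the shared secret and the clock, exporting that secret (where an app allows it) is what makes moving accounts from one authenticator app to another possible.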

- @ForgottenFlux@lemmy.world · English · adguard.com · 7M

TL;DR version:

- Mobile carriers collect and sell customer data for profit.

- Carriers use various methods to collect data, including default settings that enroll customers in data collection programs without their knowledge or consent, and opt-in programs that require explicit consent but may use misleading language or design to trick users into agreeing.

- Major mobile carriers, such as AT&T, Verizon, and T-Mobile, authorize this data collection through their privacy policies, which consumers often leave unread.

- Carriers collect a wide range of data, including web browsing history, app usage, device location, demographic information, and more. They also combine this data with information from external sources, such as credit reports, marketing mailing lists, and social media posts.

- They use this data to create models and inferences about customers' interests and buying intentions, which they then share with advertisers for targeted advertising.

- Individuals can opt out of data collection programs, use Virtual Private Networks (VPNs) to limit what data the carrier can access, and switch to alternative Domain Name System (DNS) servers to reduce the amount of data gathered.
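
The DNS tip matters because the resolver a phone uses (on mobile data, typically the carrier's by default) receives every domain name the device looks up. The following minimal sketch builds a standard DNS query packet in RFC 1035 wire format to show that the name travels as plain, readable text. The function name `build_dns_query`, the query ID and the example domain are illustrative, and the code only builds the bytes; it sends nothing.

```python
import struct


def build_dns_query(domain: str, qtype: int = 1, query_id: int = 0x1234) -> bytes:
    """Build a minimal DNS query packet (RFC 1035) for `domain`."""
    # 12-byte header: ID, flags (0x0100 = recursion desired), then counts:
    # 1 question, 0 answers, 0 authority records, 0 additional records.
    header = struct.pack(">HHHHHH", query_id, 0x0100, 1, 0, 0, 0)

    # QNAME: each label is prefixed with its length; a zero byte ends the name.
    qname = b""
    for label in domain.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 1 <= len(raw) <= 63:
            raise ValueError(f"invalid label: {label!r}")
        qname += bytes([len(raw)]) + raw
    qname += b"\x00"

    # QTYPE (1 = A record) and QCLASS (1 = IN, the Internet class).
    return header + qname + struct.pack(">HH", qtype, 1)


if __name__ == "__main__":
    packet = build_dns_query("example.com")
    print(packet)
    # The looked-up name is readable directly in the bytes sent to the resolver.
    print(b"example" in packet)  # True
```

A standard query like this is not encrypted, which is why the choice of resolver affects how much browsing data a third party can collect.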

- @ForgottenFlux@lemmy.world · English · thenewoil.org · 8M

The article lists settings to change on Android 14 and iOS 17.

According to the author:

> Recommended setting changes reduce the amount of data submitted to device manufacturers, cell carriers, or app developers and improve device security against common threats, such as those posed by nosy people who find the device unattended or by common malware.
>
> By enabling all of these settings, you are significantly reducing the amount of tracking and data collection these devices perform, but keep in mind that you are not completely eliminating it.

Summary:

The [article](https://www.privacyguides.org/en/basics/common-misconceptions/) debunks several common misconceptions related to software security and privacy:

**"Open-source software is always secure" or "Proprietary software is more secure"**

First, it clarifies that whether software is open-source or proprietary does not directly impact its security. Open-source software can be more secure due to transparency and third-party audits, but there is no guaranteed correlation. Similarly, proprietary software can be secure despite being closed-source.

**"Shifting trust can increase privacy"**

Second, it discusses the concept of "shifting trust", emphasizing that merely transferring trust from one entity to another (for example, from your internet provider to a VPN provider) does not by itself guarantee privacy. Instead, users should combine various tools and strategies to protect their data effectively.

**"Privacy-focused solutions are inherently trustworthy"**

Third, focusing only on privacy policies and marketing claims of privacy-focused solutions can be misleading. Users should prioritize technical safeguards, such as end-to-end encryption, over trusting providers based on their stated intentions alone.

**"Complicated is better"**

Lastly, it addresses the complexity of privacy solutions, encouraging users to focus on practical, achievable methods rather than unrealistic, convoluted approaches.